abbaing


-

General Systems Theory

Jan 26

/00_welcome

Before the first line of code. There are no users, no data, no bugs. Just diagrams, ideas and confidence. Someone says “Let’s keep it simple.” Another says “We can always fix it later.” It feels clean, rational... safe. This is the most dangerous moment.

/01_the_fix

A decision that feels obvious. While a software product is being designed, the wrong person proposes an optimization: “We should centralize logic.” No code is written yet. The fix is narrow, not in size but in vision: a part is defined in isolation, as if it will never touch the rest.

/02_the_reaction

This simple decision shapes everything that follows: data models adapt to it, interfaces bend around it, and teams start thinking around it. The system is already constrained. Paths are closed before they exist. The reaction is silent, but real.

/03_the_gap

What the design doesn’t see. Not a technical gap, but a conceptual one. This decision assumes that behavior is stable. That users act the same. That context doesn’t matter. That every future change will be local. None of that is true in a system that connects humans, incentives and time.

/04_the_pattern

An old pattern in a new form. The pattern is older than software. Factories optimized tasks and broke meaning. Cities optimized flows and created congestion. Institutions optimized rules and lost purpose. Each time, designers touched one variable and ignored the web around it. We keep repeating this mistake with better tools and less patience.

/05_today

How does modern software amplify it? Software products aren’t tools... they are behavioral engines. They decide who gets attention. They define what a workflow means. They reward some actions and punish others. Yet they are designed by people who think in features, not systems, and who confuse clarity with simplicity.

/06_the_blind_spot

At this point, the failure isn’t lack of knowledge. It’s lack of humility. You cannot design a system by fixing parts; you must understand interactions, delays and feedback before acting. The blind spot is designing with confidence and discovering the consequences later. This isn’t engineering; it’s gambling.

/07_a_different_posture

Design in stillness. While everything around you moves fast, while deadlines compress and opinions collide, stay in the calm center... like the eye of a hurricane, where nothing is random. From there, ask what a decision will touch, not just what it fixes. Assume consequences and side effects exist even when they are invisible. Listen to complexity instead of fighting it. The most dangerous bugs aren’t in the code. They live in assumptions we never questioned. It isn’t about fixing parts faster, but about understanding consequences earlier.

/08_last_line

A line you ignore at your own risk. The system doesn’t break when it’s used; it breaks when it’s designed by people who never learned how systems behave.

Photo by Pixabay

-

Stay human

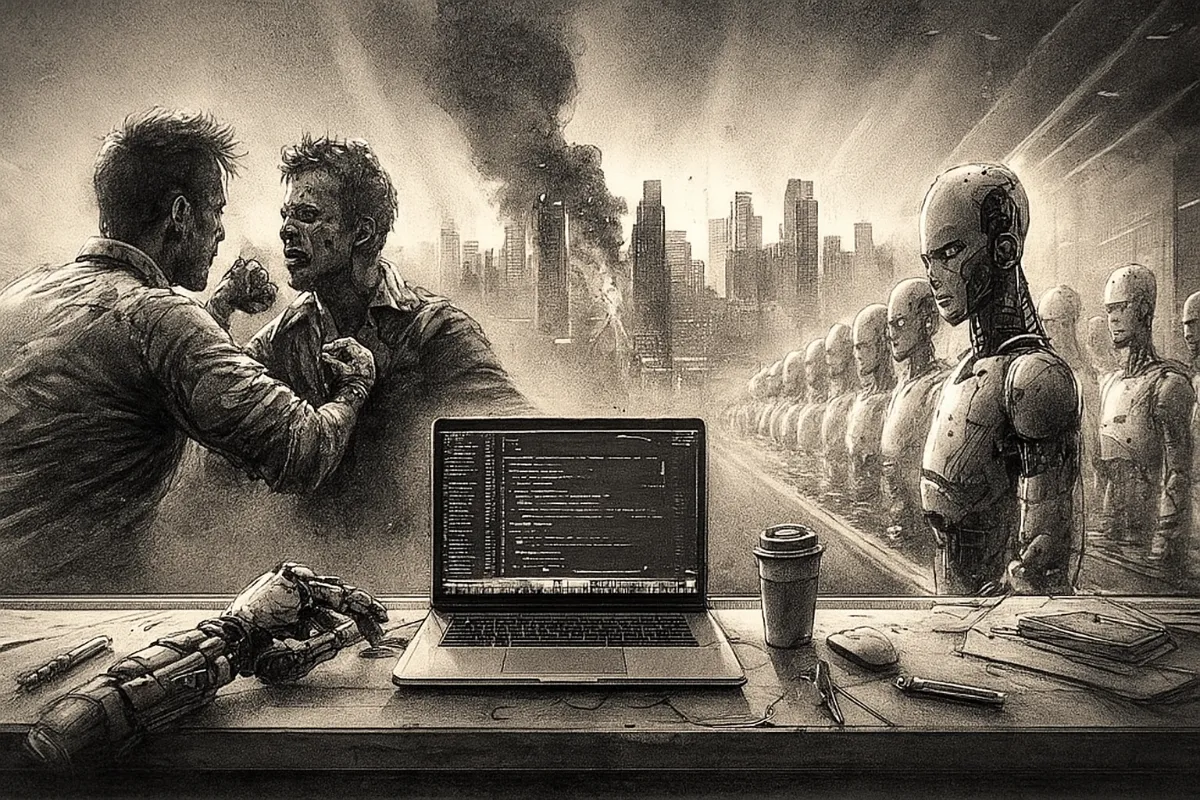

Nov 17

Fight Club (1999) begins with an unnamed man who has everything but feels nothing: a good job, a clean apartment, a perfect routine. Tyler Durden says the part that nobody wants to hear: “Advertising has us chasing cars and clothes, working jobs we hate, so we can buy shit we don’t need.”

His crisis turns into chaos. The narrator starts an underground fight club to feel alive again. The violence is not the point; the point is losing control and trying to take it back. And honestly, that feeling is growing fast in tech.

“It’s only after we’ve lost everything that we’re free to do anything.”

Before factories, a craftsman created a full piece from start to finish. Every object had personality and carried the identity of its creator. The industrial revolution broke this. Machines split the work into tiny steps, and workers became operators who lost their connection to what they made. Think of Chaplin in Modern Times (1936), obsessively tightening bolts on an assembly line until he goes insane. That scene reflected reality: factories imposed discipline, shifts and disposable workers.

Today we may be seeing a similar process in software. For many years, programming was creative. We solved problems and built things in our own style. Now genAI can write large parts of the code in seconds. Companies like the speed, but there’s a hidden cost: devs start to feel less connected to the work.

The risk isn’t that AI replaces us. The risk is becoming irrelevant. If machines create more and we create less, our role shrinks. We become people who supervise code instead of people who design it. And that kills the sense of purpose.

History shows a simple pattern. Every new invention, every new machine, brings more speed but also more distance between humans and the work they do. We still have time to avoid that. The answer is to act like digital craftsmen. Let AI write the boring parts, fix small bugs and do repetitive work. But we should stay close to the decisions, the architecture and the ideas. That’s where humans add real value. That’s how we stay human.

At the end of Fight Club, the narrator stops running. He takes back control. No drama, no illusion, just a clean break. We need something similar in tech. We don’t need chaos or buildings falling. Just a clear decision about the kind of work we want to do. It should push us to build better, think more clearly and create things that no machine can copy.

If you don’t shape the work, the work shapes you. Stay human.

About Me

I’m David, a Senior Software Engineer working at the edge of automation.